Most of us are used to interacting with generative artificial intelligence models through their AI assistants. I’m referring to popular applications such as ChatGPT, Gemini, Copilot, Claude, Le Chat, and Meta AI. You install them on your mobile phone or computer, or access them from your browser, and hold a written or voice conversation so that they can answer questions, suggest ideas, and perform other tasks. But AI professionals and enthusiasts often need to get more out of these models, or to have more control over them. Besides using the assistants, they have two options: access the models through their API or install them on their own computers. Yes, you can install artificial intelligence on your PC.

First of all, not all artificial intelligence models can be installed on your PC. The main reason is that their owners do not allow it, as is their right, so you can only use those models online by connecting to their servers. But there are open models, usually described as open source or open-weight, whose licenses do allow you to download and install them. The main ones are Llama (from Meta), DeepSeek, Phi (from Microsoft), Gemma (from Google), and Mistral. If you have a powerful computer and want to tinker with artificial intelligence, or use it offline, tools such as Ollama or LM Studio make this task easier.

Using artificial intelligence through its official assistant has its advantages. The main one is that the model runs on the provider's servers, so you don’t depend on the processing power of your phone, tablet, or computer. It can also search for current information. The disadvantage is precisely that this connection leaves a trail, so you cannot be completely sure that your conversations with the AI won’t be accessible to third parties. Installing artificial intelligence on your PC, on the other hand, lets you use it without an Internet connection. It also lets you bypass the assistant's restrictions and gives you more control over the AI. The disadvantage is that you need a powerful computer with enough RAM, a good GPU, and enough storage space. And by default, a local model will not perform online searches unless you configure it to do so.

Installing artificial intelligence on your PC with Ollama

Although a large language model (LLM), which is what we are calling artificial intelligence here, can be installed manually, tools such as Ollama or LM Studio make the process easier. For this occasion, we will choose Ollama. It is one of the most recommended tools, and although it does not have a graphical interface and works in command-line mode, it is very easy to use. It is also free and open source. And you can install it on Windows, macOS, or Linux, or run it as a Docker image on a server or NAS.

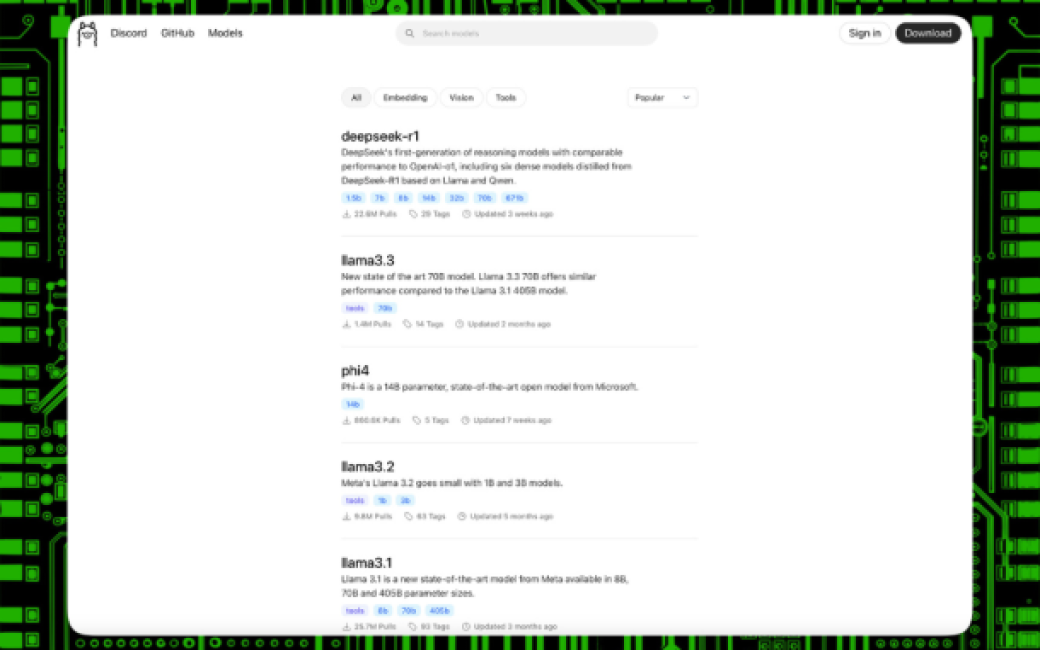

Another key advantage is that Ollama offers several ready-to-install artificial intelligence models. This allows you to choose between different sizes to suit the PC you have at home or in your office. For example, you can try DeepSeek-R1 in its 7B version, that is, with 7 billion parameters. It takes up 4.7 GB and requires a PC with 8 GB of RAM to run. Or, if you want to try its most complete version, 671B, with 671 billion parameters, you will need 404 GB of storage and, in practice, far more than 32 GB of RAM, because the model has to fit in memory to run at a usable speed. The same applies to Llama, Phi, and Gemma: the larger the version you choose, the more memory and storage space you will need.

The power of your graphics card also affects how quickly the installed artificial intelligence will respond. For an 8B-parameter model, you will need a GPU with around 4 GB of VRAM, and around 16 GB of VRAM if you install a more complex model with 32B parameters. To run DeepSeek-R1 671B, the most complete version, you need several GPUs and more than 1 TB of VRAM. There is a reason why companies like Microsoft, Amazon, Meta, and OpenAI spend so much money on data centres and servers to run their artificial intelligence models: more complex models require more powerful machines.
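The VRAM figures above are consistent with a simple rule of thumb: the memory taken by the weights is roughly the number of parameters multiplied by the bytes used to store each one. The sketch below is only an approximation under stated assumptions. The precisions (4-bit for the smaller models, 16-bit for the 671B model) are my guesses and are not stated in the article, and the estimate ignores the extra memory needed for context and runtime overhead. The function name `weight_memory_gb` is made up for this example.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate memory for the model weights alone, in GB (10^9 bytes).

    One billion parameters stored at 8 bits each take about 1 GB,
    so the result scales linearly with both arguments.
    """
    return params_billions * bits_per_weight / 8


# Assumed precisions: 4-bit quantization for the small models,
# 16-bit weights for the largest one.
examples = [
    ("8B at 4-bit", 8, 4),
    ("32B at 4-bit", 32, 4),
    ("671B at 16-bit", 671, 16),
]

for label, params, bits in examples:
    print(f"{label}: ~{weight_memory_gb(params, bits):.0f} GB")
```

Under these assumptions the estimate gives about 4 GB, 16 GB, and 1342 GB respectively. Treat the results as a lower bound when sizing a machine, since real usage is higher than the weights alone.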

Installing AI locally on your computer

The only complexity of installing artificial intelligence on your PC using Ollama is that everything is done with commands, as it does not have a graphical interface with drop-down menus or buttons. But this does not have to be a problem. On the contrary, doing away with an interface allows Ollama to be lighter and devote resources to the AI, which requires all the power of your computer.

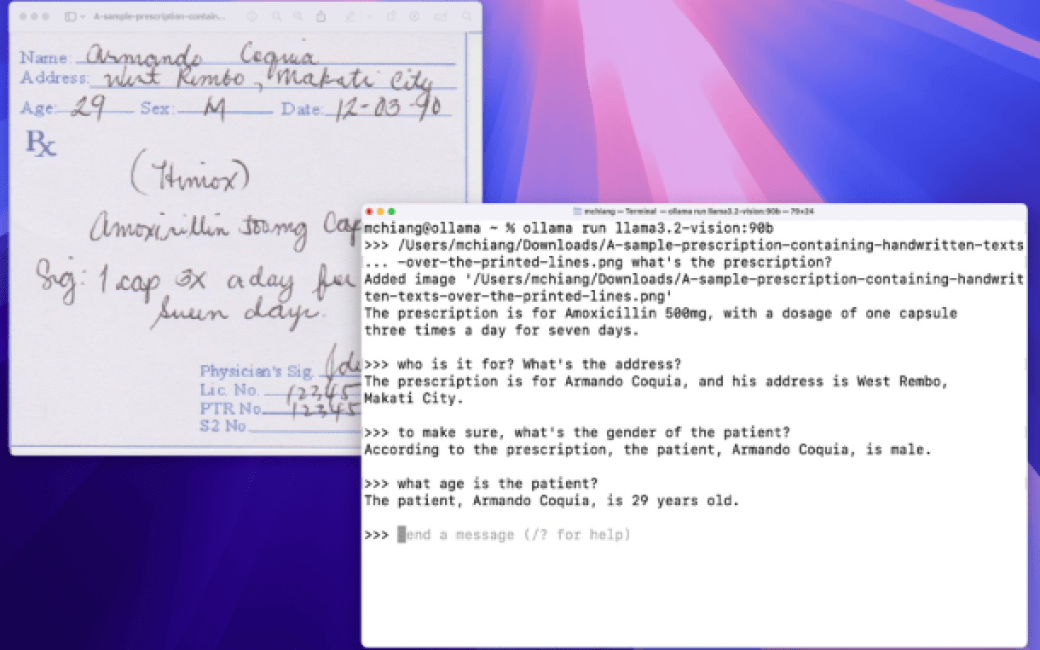

So the first thing to do is download and install Ollama. As we saw earlier, it has installers for Windows, macOS, and Linux. Once it is installed, open the terminal, command line, PowerShell, or whichever app you use to run commands on your operating system. Then run the command `ollama -v` to check that it is installed.
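If you would rather run this check from a script, a minimal sketch in Python might look like the following. It only wraps the same `ollama -v` command described above. The function name `ollama_version` is hypothetical, and the exact text Ollama prints may vary between versions, so the script simply echoes whatever it receives.

```python
import shutil
import subprocess
from typing import Optional


def ollama_version() -> Optional[str]:
    """Return the text printed by `ollama -v`, or None if Ollama is unavailable."""
    executable = shutil.which("ollama")  # look for the program on PATH
    if executable is None:
        return None
    try:
        result = subprocess.run(
            [executable, "-v"], capture_output=True, text=True, timeout=30
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    # Use whichever stream carries the message; None if both are empty.
    return (result.stdout or result.stderr).strip() or None


version = ollama_version()
print(version if version else "Ollama was not found on PATH")
```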

Step two: choose which artificial intelligence model you want to install. At the time of writing, you can choose between DeepSeek, Llama, Phi, Mistral, Qwen, Gemma, and other models, some of which are adaptations of Llama for specific tasks or smaller versions that run on more modest computers. Each AI model has its own explanatory page so you can learn more about that model, its parameters, and so on. Ollama's documentation also includes a list of the most popular AI models, the parameter sizes they are available in, the disk space they occupy, and the command needed to install them.

Once you know which model you want, simply enter the appropriate command to install it on your PC. For example, `ollama run deepseek-r1:8b` installs DeepSeek-R1 in its 8B version. If everything goes well, the terminal or command line will display information about the download: percentage complete, amount downloaded, total amount to download, download speed, estimated time, and so on.
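If you only want to download a model without opening a chat session, Ollama also provides an `ollama pull` command. The sketch below shows one way to call it from Python. The function name `pull_model` is hypothetical, and the script assumes the `ollama` program is on your PATH.

```python
import shutil
import subprocess


def pull_model(model: str) -> bool:
    """Download a model with `ollama pull`; return True if the command succeeds."""
    executable = shutil.which("ollama")
    if executable is None:
        return False  # Ollama is not installed or not on PATH
    # Output is not captured, so Ollama's own progress display reaches the terminal.
    completed = subprocess.run([executable, "pull", model])
    return completed.returncode == 0
```

Calling `pull_model("deepseek-r1:8b")` would download the same model as in the example above. Keep in mind that this is a download of several gigabytes.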

After downloading, Ollama will install that AI model and launch it so you can start using it right away. If everything goes well, you will see the word “success” appear in the terminal and, below that, a message indicating that you can now interact with the AI you have installed. From there, Ollama works just like an AI assistant, except that you interact with the artificial intelligence via the command line. You can find more information in the official Ollama documentation.
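Besides the command line, Ollama exposes a local HTTP API, which is handy if you want to send prompts from your own programs. The following is a minimal sketch using only the Python standard library. It assumes Ollama is running on its default address, `http://localhost:11434`, and that the model named in the call has already been downloaded. The helper names `build_generate_request` and `ask` are made up for this example.

```python
import json
import urllib.request
from typing import Optional

# Default local address of the Ollama server (assumption: not reconfigured).
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(
    model: str, prompt: str, base_url: str = OLLAMA_URL
) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint."""
    # "stream": False asks for one complete JSON reply instead of chunks.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(
    model: str, prompt: str, base_url: str = OLLAMA_URL, timeout: float = 120.0
) -> Optional[str]:
    """Send a prompt to a local model; return its answer, or None on failure."""
    request = build_generate_request(model, prompt, base_url)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            body = json.load(response)
    except (OSError, ValueError):
        # OSError covers connection and HTTP errors; ValueError covers bad JSON.
        return None
    return body.get("response")
```

For example, `ask("deepseek-r1:8b", "Explain what a parameter is in one sentence.")` returns the model's reply as a string, or `None` if the server cannot be reached.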